API Exploitation and Investment: These bots likely use X's API, either through costly legitimate access or by unauthorized means. Either route implies a significant investment of resources aimed at manipulating the algorithm, and the near-instant speed at which they interact with posts points to an automated, well-resourced operation.

Algorithmic Manipulation: By liking posts the moment they appear, these bots trigger X's algorithms to treat the content as spam, which reduces its visibility. This exposes a flaw in spam detection: the system penalizes the legitimate author for activity generated by the bots.

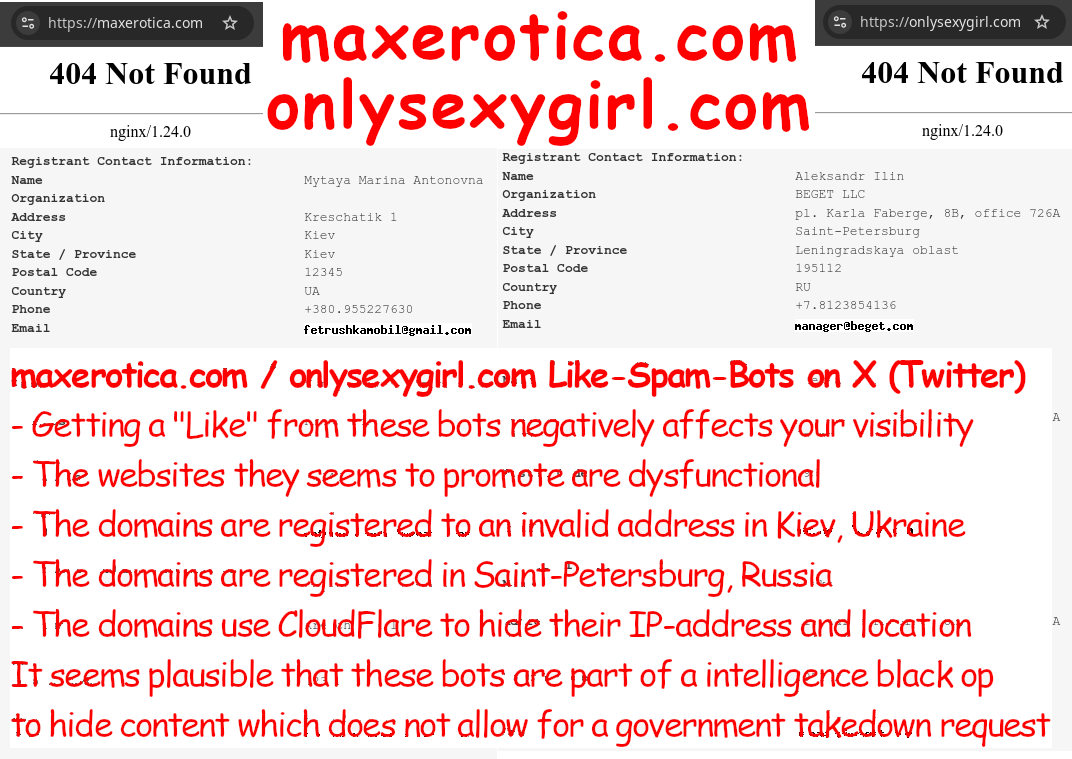

Policy Enforcement Gaps: When users report these bots, X often responds that they do not violate its policies, despite their deceptive practices. This discrepancy raises questions about how clearly X's policies are defined and how consistently they are applied, and suggests either inadequate resources for enforcement or thresholds for action that are set too high.

User Impact: The visibility of affected users' content is severely reduced, harming both personal and professional engagement online. This underscores the need for X to refine its algorithms so they can distinguish genuine engagement from manipulated engagement.

Broader Implications: This scenario illustrates how hard it is to maintain platform integrity against economically driven manipulation. It calls for platforms to be more transparent about how they detect and mitigate such activity, and to give users tools to counteract its effects.

Need for Policy and Algorithmic Evolution: Policies need to be reassessed, and algorithms improved so that they do not inadvertently become tools for suppressing content. Community involvement, and possibly industry collaboration, will be needed to address these threats to digital trust and integrity.