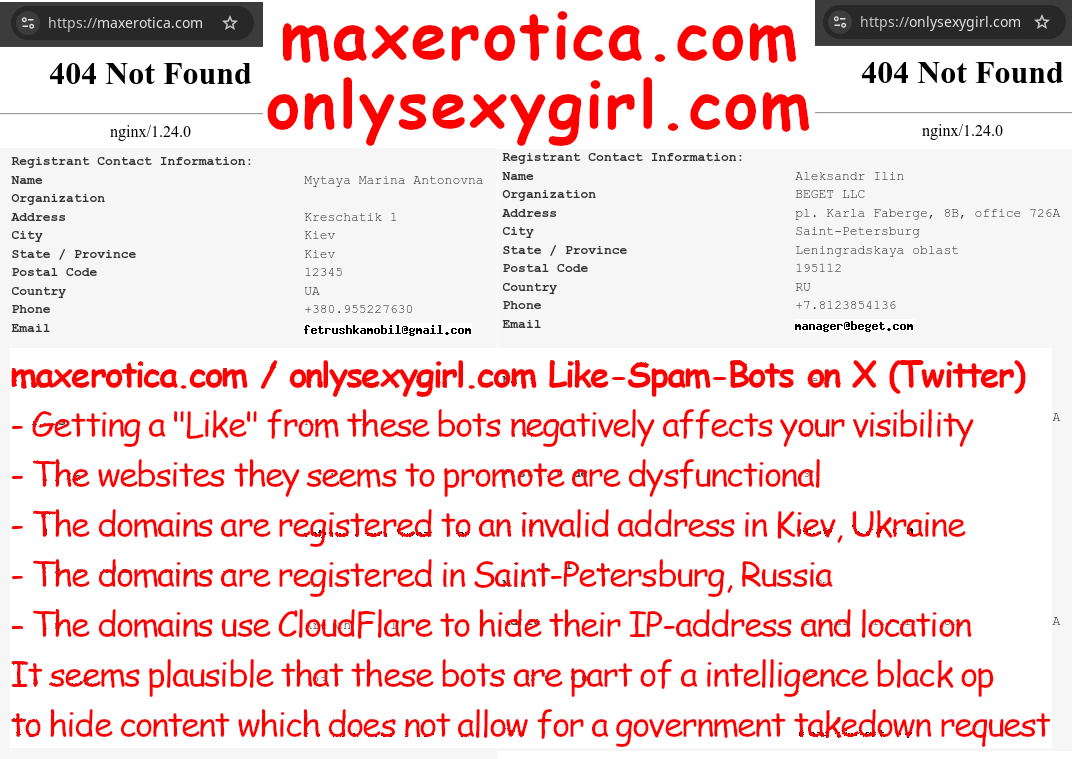

- **API Exploitation and Investment**: These bots likely use X's API, either through costly legitimate access or by unauthorized means, which suggests a significant investment of resources. Their near-instant interaction with posts points to an automated, well-organized operation, potentially aimed at broader manipulation.

- **Algorithmic Manipulation**: By liking posts instantly, these bots appear to trigger X's algorithms into treating the content as spam, which reduces its visibility. This could serve two purposes: manipulating visibility and indirectly targeting specific groups.

- **Creating a Hostile Environment**: The bots may be designed to target, or to appear to target, Arab and Muslim users disproportionately, many of whom may have cultural or religious objections to pornography. Even though the linked websites are defunct, the first impression created by the profile and its links can still cause discomfort or offense, contributing to a hostile online environment.

- **Cultural Misinformation**: If these accounts are perceived as associated with Arab or Muslim communities because of their imagery or what their links imply, they can perpetuate stereotypes or misinformation, fostering hostility and misunderstanding.

- **Policy Enforcement Gaps**: When users report these bots, X often responds that no policy has been violated, despite the bots' deceptive practices. This raises questions about how clearly the policies are written and how consistently they are applied, especially where cultural sensitivities may be at play.

- **User Impact**: Beyond reducing the visibility of users' content, these bots create potential for targeted harassment and a negative online experience for specific groups. This underscores the need for X to refine its algorithms so they can distinguish genuine engagement from manipulated engagement while taking cultural context into account.

- **Broader Implications**: Beyond simple spam, this activity could be part of a strategy to manipulate perceptions, inflame cultural divides, or serve political agendas by creating friction. It highlights the need for platforms to watch for content that does more than spam: content that may also manipulate social dynamics.

- **Need for Policy and Algorithmic Evolution**: There's a clear need for policy reassessment to address not only spam but also the cultural impact of content. Algorithms should be sensitive to how content might be perceived by different cultural groups, ensuring they don't inadvertently amplify hostility. Community involvement and industry collaboration are crucial to address these multifaceted threats to digital trust, integrity, and social harmony.